Introduction: In a recent project, we set out to make machine learning models more interpretable by combining SHAP (SHapley Additive exPlanations) with MIR (Model Influence Regions). The work was a collaborative effort focused on plotting and analyzing where the model's kernels act on the input time series and how that relates to the model's output.

Project Details:

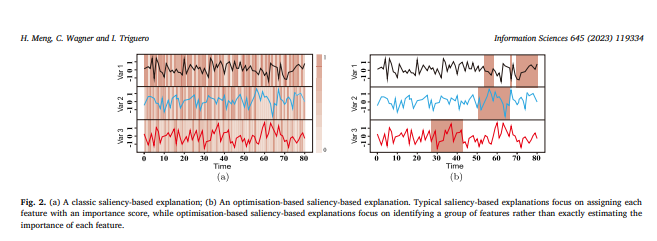

- Objective: To plot the influence of kernels on the input time series, both with and without SHAP weighting, following the guidelines in a referenced article.

- Tasks:

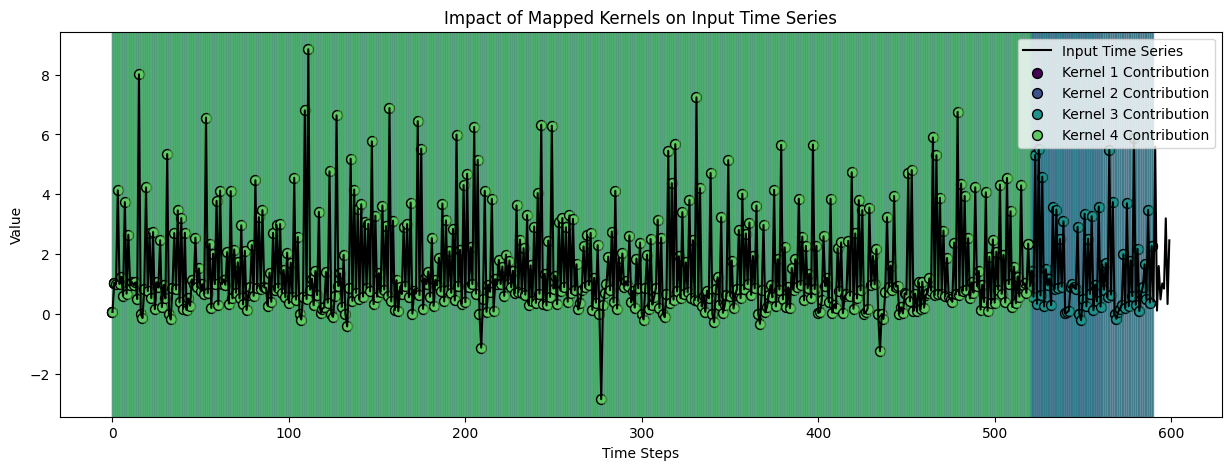

- Implemented plotting for kernel influence zones, highlighting where specific kernels impact the input time series.

- Visualized overlapping influences to show regions affected by multiple kernels.

- Mapped kernel influence on the temporal dimension (x-axis) and input series values (y-axis).
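
The tasks above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes each kernel is a 1D (optionally dilated) kernel described by a hypothetical `(start, length, dilation)` tuple, and that any per-kernel SHAP values have already been computed elsewhere and are passed in as plain numbers. The names `kernel_zone`, `influence_map`, and `plot_influence` are made up for this example.

```python
import numpy as np


def kernel_zone(start, length, dilation=1):
    """Time indices touched by one kernel placement (assumed 1D dilated kernel)."""
    return start + dilation * np.arange(length)


def influence_map(n_steps, kernels, weights=None):
    """Accumulate kernel influence per time step.

    kernels: list of (start, length, dilation) tuples (hypothetical format).
    weights: optional per-kernel scores, e.g. SHAP values computed elsewhere.
             If None, every kernel counts as 1, so the result is an overlap count.
    """
    if weights is None:
        weights = np.ones(len(kernels))
    total = np.zeros(n_steps)
    for (start, length, dilation), w in zip(kernels, weights):
        idx = kernel_zone(start, length, dilation)
        idx = idx[(idx >= 0) & (idx < n_steps)]  # drop indices outside the series
        total[idx] += w
    return total


def plot_influence(series, kernels, weights=None):
    """Draw the series (time on x, values on y) with each kernel's zone marked."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting

    series = np.asarray(series, dtype=float)
    t = np.arange(len(series))
    fig, ax = plt.subplots(figsize=(8, 3))
    ax.plot(t, series, color="black", linewidth=1, label="input series")

    for k, (start, length, dilation) in enumerate(kernels):
        idx = kernel_zone(start, length, dilation)
        idx = idx[(idx >= 0) & (idx < len(series))]
        # Marker size scales with |weight| when weights (e.g. SHAP) are given.
        size = 40 if weights is None else 40 + 200 * abs(weights[k])
        ax.scatter(idx, series[idx], s=size, alpha=0.6, label=f"kernel {k}")

    # Time steps touched by more than one kernel are flagged separately.
    overlap = influence_map(len(series), kernels) > 1
    ax.scatter(t[overlap], series[overlap], marker="x", color="red", label="overlap")

    ax.set_xlabel("time step")
    ax.set_ylabel("input value")
    ax.legend(loc="best", fontsize="small")
    return fig, ax


# Two illustrative kernels on an 8-step series: one dense, one with dilation 2.
example_kernels = [(0, 3, 1), (2, 3, 2)]
overlap_counts = influence_map(8, example_kernels)
shap_weighted = influence_map(8, example_kernels, weights=[0.5, -0.2])
```

In this toy setup, time step 2 receives an overlap count of 2 because both kernels touch it. Passing `weights` switches the same map from a plain count ("without SHAP") to a signed, attribution-weighted sum ("with SHAP"). How the SHAP values themselves are obtained is left out here, since it depends on the model and explainer used.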

Challenges:

- Ensuring the accuracy of plots according to the article’s specifications.

- Delivering high-quality visualizations within the budget constraints.

Achievements:

- Successfully plotted kernel influence zones, providing clear visual insights into model behavior.

- Enhanced model interpretability, supporting a better understanding of, and greater trust in, machine learning models.

- Completed the project within the stipulated budget and time frame, receiving positive feedback from the client.

Conclusion: This project underscores the importance of model interpretability in machine learning. By leveraging SHAP and MIR, we were able to provide valuable insights into model behavior, ultimately contributing to more transparent and reliable AI systems.

Enhancing Model Interpretability with SHAP and MIR